Overview

Before I get too far ahead in this series, I am going to pause quickly on the networking side and discuss Identity and Access Management (IAM). IAM is a critical component of AWS and will play into future blog posts.

I will not be discussing security architecture around IAM.

For all other posts in this series see: AWS Security Overview Table of Contents

Let's begin...

NOTE: For any definitions, I am going to use them more or less verbatim from the AWS documentation. Their documentation is robust and usually pretty current. So rather than citing everything, just assume I have gotten it directly from the respective AWS documentation.

Identity and Access Management (IAM)

Let me first start off by saying, if you really want to understand IAM you should read the 689 page IAM User Guide. It's your friend. It's also filled with labs that you can do on your own.

If you don't want to read the entire User Guide at least take a quick look at the IAM Features so you know what features IAM provides.

At the end of the day, identity and access management is a very important requirement. It's also included in every compliance standard I personally know of and if you're using AWS, you will use IAM.

Definition

Amazon's definition is, "AWS Identity and Access Management (IAM) is a web service that helps you securely control access to AWS resources for your users. You use IAM to control who can use your AWS resources (authentication) and how they can use resources (authorization)."

It's centralized and allows for fine-grained controls. Within IAM you have Users, Groups, and Roles.

IAM API

This is more technical, but the 425 page IAM API documentation (and the API docs for other AWS services) should also be required reading.

Every action within AWS is an API call. As a result, the API documentation seems like a logical place to begin when you want to start building alerting, monitoring and auditing around IAM. It's also an ideal starting point for creating IAM policies.

I would suggest first meeting with your governance team and figuring out which actions you must monitor at a minimum, then going from there to create neat correlation rules.

Consider this entry from the PCI DSS Effective Daily Log Monitoring document.

10.2.5 Use of and changes to identification and authentication mechanisms—including but not limited to creation of new accounts and elevation of privileges—and all changes, additions, or deletions to accounts with root or administrative privileges

As an example, let's say an admin wanted to gain access to billing information for some reason. They would need to attach a Billing IAM policy to their account.

This action would make an API call to AttachUserPolicy. This would be the eventName in the log.

An example of the log file would look like this; however, I did remove a few things for privacy and brevity.

    {
      "eventVersion": "1.02",
      "userIdentity": {
        "type": "IAMUser",
        "arn": "arn:aws:iam::3xxxxxxxxxx8:user/polsen",
        "userName": "polsen",
        "sessionContext": {
          "attributes": {
            "mfaAuthenticated": "false",
            "creationDate": "2017-11-07T21:18:20Z"
          }
        },
        "invokedBy": "signin.amazonaws.com"
      },
      "eventTime": "2017-11-07T21:18:41Z",
      "eventSource": "iam.amazonaws.com",
      "eventName": "AttachUserPolicy",
      "awsRegion": "us-east-1",
      "sourceIPAddress": "76.26.x.x",
      "userAgent": "signin.amazonaws.com",
      "requestParameters": {
        "userName": "polsen",
        "policyArn": "arn:aws:iam::aws:policy/job-function/Billing"
      }
    }

So now you could set up alerting on this eventName combined with the Billing policyArn. Or prevent it altogether. Or, if you don't want to prevent it, you could take action to remove the policy from said user automatically (via code) when an alert triggers.
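As a rough illustration of that alerting idea, here is a minimal Python sketch that checks a single CloudTrail record for an AttachUserPolicy call carrying the Billing policy. The watch list and the function name are my own assumptions for illustration; only the field names come from the log entry above.

```python
import json

# Hypothetical watch list: policy ARNs we want to hear about when attached.
WATCHED_POLICY_ARNS = {"arn:aws:iam::aws:policy/job-function/Billing"}


def is_watched_policy_attachment(event: dict) -> bool:
    """Return True if the record attaches a watched policy to a user."""
    if event.get("eventSource") != "iam.amazonaws.com":
        return False
    if event.get("eventName") != "AttachUserPolicy":
        return False
    # requestParameters can be missing or null on some records.
    params = event.get("requestParameters") or {}
    return params.get("policyArn") in WATCHED_POLICY_ARNS


# Trimmed copy of the record shown above.
sample_event = json.loads("""
{
  "eventSource": "iam.amazonaws.com",
  "eventName": "AttachUserPolicy",
  "requestParameters": {
    "userName": "polsen",
    "policyArn": "arn:aws:iam::aws:policy/job-function/Billing"
  }
}
""")

if is_watched_policy_attachment(sample_event):
    user = sample_event["requestParameters"]["userName"]
    print(f"ALERT: watched policy attached to user {user}")
```

In a real pipeline the same check would run against every record delivered by CloudTrail, and the alert would go to whatever tooling your team already uses.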

In either case, there are a myriad of API calls for each of the AWS services, which is why your team needs to understand them in detail.

I will revisit APIs at a later time.

AWS Access

There are multiple ways to access your AWS account and/or its resources/services.

- Email and password (root user credentials, see below)

- IAM user name and password via the AWS console

- Access Keys - Used with the command line interface and programmatically via code

- Key Pairs - Used with specific AWS services (for example, Amazon EC2)

- SSH Keys with CodeCommit

- Server certificates, which can be used to authenticate to some AWS services

- You can also enable Multi-Factor Authentication (MFA)

You also have the Security Token Service (STS), which allows you to request temporary, limited-privilege credentials. It's useful in scenarios such as identity federation, delegation, cross-account access, and IAM roles.

Root User Credentials

When you create your AWS account you will have to set an email address and a password for your account. This combination is called your root user credentials. This account gives you unrestricted access to all resources, including billing.

NO ONE should be using this account for daily operations. If a person claims they need to use this account "to get their job done", and it's not for one of the items listed here, they should really be fired.

You should also set up multi-factor authentication (MFA) on the root account (really all elevated and critical accounts at a minimum). The MFA token for the root account should then be locked away someplace so it can be used in the case of an emergency.

You could even keep the email and password in one location, and the MFA token in another, to enforce two-man procedures.

AWS gives you a "check box" to follow here once you create an AWS account.

So if you're not supposed to use your root account, then what? Well, the best method is to create a new IAM Group, assign it the AdministratorAccess policy, create an IAM User, and assign that User to the new group. Log out of the root account, and manage your AWS environment via that new user.
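The four steps in that paragraph map onto four AWS CLI calls. The sketch below only builds and prints the commands (a dry run), so nothing is changed in your account. The group name "Administrators" and the user name "admin-user" are placeholders I picked for illustration.

```python
import shlex
from typing import List

# AWS managed policy that grants full administrative access.
ADMIN_POLICY_ARN = "arn:aws:iam::aws:policy/AdministratorAccess"


def bootstrap_admin_commands(group_name: str, user_name: str) -> List[List[str]]:
    """Build the AWS CLI calls for: group -> policy -> user -> membership."""
    return [
        ["aws", "iam", "create-group", "--group-name", group_name],
        ["aws", "iam", "attach-group-policy", "--group-name", group_name,
         "--policy-arn", ADMIN_POLICY_ARN],
        ["aws", "iam", "create-user", "--user-name", user_name],
        ["aws", "iam", "add-user-to-group", "--group-name", group_name,
         "--user-name", user_name],
    ]


# Dry run: print the commands instead of executing them.
for command in bootstrap_admin_commands("Administrators", "admin-user"):
    print(shlex.join(command))
```

The new user still needs credentials of its own (a console password or access keys) and MFA before it is useful, which this sketch leaves out.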

An IAM user with administrator permissions is not the same thing as the AWS account root user.

IAM Users

With AWS, a user does not have to represent an actual person. An IAM User may be a service, for example. An IAM user is just an identity with credentials and permissions.

If you use IAM service accounts, they are really no different from the service accounts you have been using with Active Directory.

An IAM user is associated with one and only one AWS account and when you create a user within IAM the user by default cannot access anything in that account.

For example, here is a new user, blogtest. As you can see, there are no permissions assigned to this user. He/she can't do anything.

AWS identifies an IAM User by the following:

- Friendly Name (UserName) - "meh"

- Amazon Resource Name (Arn) - arn:aws:iam::3xxxxxxxxxx8:user/meh

- You use the ARN when you want to uniquely identify the user across all of AWS. For example, if you were writing an IAM policy and wanted to specify this user as a Principal within the IAM policy.

- Unique Identifier (UserId) - returned only when you create a user via the API and/or CLI tools

Here is an example:

aws iam create-user --user-name meh

    {
      "User": {
        "UserName": "meh",
        "Path": "/",
        "CreateDate": "2017-11-09T14:34:07.488Z",
        "UserId": "AIDAISW5HE5JJWFML3H6Y",
        "Arn": "arn:aws:iam::3xxxxxxxxxx8:user/meh"
      }
    }
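The Arn value in that output follows the general layout arn:partition:service:region:account-id:resource. As a small sketch (the Arn class and parse_arn helper are my own names, not part of any AWS SDK), you can split it apart like this:

```python
from typing import NamedTuple


class Arn(NamedTuple):
    partition: str
    service: str
    region: str
    account_id: str
    resource: str


def parse_arn(arn: str) -> Arn:
    """Split an ARN into its colon-separated fields."""
    # Split at most 5 times so colons inside the resource part survive.
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    return Arn(*parts[1:])


user_arn = parse_arn("arn:aws:iam::3xxxxxxxxxx8:user/meh")

# For IAM users the resource is "user/<optional path>/<name>".
resource_type = user_arn.resource.split("/", 1)[0]
user_name = user_arn.resource.rsplit("/", 1)[-1]
print(user_arn.service, user_arn.account_id, resource_type, user_name)
```

Note that the region field is empty for IAM ARNs, since IAM is not tied to a region.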

To change a user's name or path, you must use the AWS CLI or the API. IAM does not automatically update policies that refer to the user as a resource, so you have to update those yourself.

An example of this is here. You can compare it with the code snippet above. You will see the UserId stayed the same, and the UserName and Arn were updated with mehmeh.

aws iam update-user --user-name meh --new-user-name mehmeh

aws iam get-user --user-name mehmeh

    {
      "User": {
        "UserName": "mehmeh",
        "Path": "/",
        "CreateDate": "2017-11-09T14:34:07Z",
        "UserId": "AIDAISW5HE5JJWFML3H6Y",
        "Arn": "arn:aws:iam::3xxxxxxxxxx8:user/mehmeh"
      }
    }

You can only have 5,000 users in an AWS account. Likewise, MFA devices are equal to the user quota for the account (5,000). Users can only be a member of 10 groups.

IAM Groups

An IAM Group is a collection of IAM Users.

Groups are a way to more easily manage users. It's recommended that IAM policies be attached to IAM Groups rather than to specific users. When you assign a user to a particular group, the user automatically has the permissions that are assigned to said group.

A group is not an identity. You cannot specify a group as a Principal in an access policy. When creating policies, the Principal element is used to specify the IAM user, federated user, or assumed-role user.

A group can contain many users, and a user can belong to many groups (the limit is 10). You cannot nest groups; a group cannot contain other groups in a parent/child hierarchy.

You can only have 300 groups in one AWS account.

You can list your groups via the CLI:

aws iam list-groups

    {
      "Groups": [
        {
          "Path": "/",
          "CreateDate": "2017-11-06T18:16:07Z",
          "GroupId": "AGxxxxxxxxxxxxxxxxxZ2",
          "Arn": "arn:aws:iam::3xxxxxxxxxx8:group/Administrators",
          "GroupName": "Administrators"
        },
        {
          "Path": "/",
          "CreateDate": "2017-11-08T00:39:37Z",
          "GroupId": "AGxxxxxxxxxxxxxxxxxBU",
          "Arn": "arn:aws:iam::3xxxxxxxxxx8:group/Billing",
          "GroupName": "Billing"
        },
        {
          "Path": "/",
          "CreateDate": "2017-11-08T00:41:20Z",
          "GroupId": "AGxxxxxxxxxxxxxxxxxAU",
          "Arn": "arn:aws:iam::3xxxxxxxxxx8:group/BillingView",
          "GroupName": "BillingView"
        }
      ]
    }

IAM Roles

A role is intended to be assumable by anyone who needs it. It's not associated with a particular person (as an IAM User is). The credentials a role provides are temporary, and a role can be assigned to federated users who use something other than IAM as their identity provider.

You can also configure federated users using AWS Directory Service. This would be used if you're using Microsoft Active Directory within your current environment and want to allow users to access AWS services and resources.

IAM also supports SAML 2.0 to provide SSO, as well as web identity federation using something like Facebook or Google authentication.

Federated users are not traditional IAM users. They are assigned roles and then permissions are assigned to those roles. Unlike a traditional user, a role is intended to be assumable by anyone who needs it. More on roles later.

Temporary Security Credentials

You can use AWS Security Token Service (STS) to provide trusted users with temporary credentials that allow them to access AWS resources. These can be used to log into the AWS console or make API requests.

They consist of a short-lived (as in they will expire) access key ID, secret access key, and session token. You can configure the expiration times. Once expired, they cannot be reused.

Amazon defines the expiration as, "Credentials that are created by IAM users are valid for the duration that you specify, from 900 seconds (15 minutes) up to a maximum of 129600 seconds (36 hours), with a default of 43200 seconds (12 hours); credentials that are created by using account credentials can range from 900 seconds (15 minutes) up to a maximum of 3600 seconds (1 hour), with a default of 1 hour."
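To make those numbers concrete, here is a small sketch that applies the limits from the quote above to a requested duration. The function name and the created_by_iam_user flag are my own; the limits are simply the values AWS states in that quote and may differ for other STS calls.

```python
from typing import Optional

# (minimum, maximum, default) in seconds, taken from the AWS quote above.
IAM_USER_LIMITS = (900, 129600, 43200)
ACCOUNT_LIMITS = (900, 3600, 3600)


def resolve_duration(requested: Optional[int], created_by_iam_user: bool = True) -> int:
    """Return the session duration to use, or raise if it is out of range."""
    minimum, maximum, default = (
        IAM_USER_LIMITS if created_by_iam_user else ACCOUNT_LIMITS
    )
    if requested is None:
        return default  # nothing requested, fall back to the default
    if not minimum <= requested <= maximum:
        raise ValueError(
            f"duration {requested}s is outside the range {minimum}-{maximum}s"
        )
    return requested


print(resolve_duration(None))                              # IAM user default
print(resolve_duration(None, created_by_iam_user=False))   # account default
print(resolve_duration(7200))                              # 2 hours, IAM user
```

Asking for 7200 seconds with created_by_iam_user=False raises a ValueError, because account credentials are capped at one hour.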

There is no AWS identity associated with STS. It's a global service, so the credentials will work globally. You can log STS usage via CloudTrail logging.

It's used with enterprise and web (e.g., Facebook login) identity federation.

Cross Account Access

Cross-account access allows an IAM User in one AWS account to perform actions in another AWS account. This of course is determined by what permissions you allow when setting it up. Deny by default still holds true here.

A good example of this may be Production and Development. It could also be APAC and Americas, or Husband and Wife. It's possible to provide third parties cross-account access as well.

This saves you from having to create UserProd and UserDev * n users. It reduces the number of IAM Users. It's accomplished via Roles, with the IAM User assuming said role to accomplish their task(s).

This could make for interesting lateral movement cases where one AWS account (or a third party) gets compromised and the attacker is then able to perform actions on the other AWS account via the cross-account access.

It's important to know what cross accounts exist and the level of access allowed against critical systems.

You could also monitor this cross-account access via the AssumeRole API call and then filter by the ARN of the role you granted the IAM User(s). Anytime they "Assume" that role (e.g., aws sts assume-role), it will be logged in CloudTrail. You could alert on this for auditing purposes.
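A minimal sketch of that kind of filter is below. It assumes the CloudTrail records are already loaded as Python dictionaries and that the role ARN appears under requestParameters.roleArn. The role ARNs and account IDs are made up for illustration.

```python
from typing import Iterable, Iterator, Set

# Hypothetical cross-account role we care about.
WATCHED_ROLE = "arn:aws:iam::111111111111:role/ProdReadOnly"


def assume_role_events(events: Iterable[dict], watched_role_arns: Set[str]) -> Iterator[dict]:
    """Yield AssumeRole records that target one of the watched roles."""
    for event in events:
        if event.get("eventSource") != "sts.amazonaws.com":
            continue
        if event.get("eventName") != "AssumeRole":
            continue
        params = event.get("requestParameters") or {}
        if params.get("roleArn") in watched_role_arns:
            yield event


# Made-up records, trimmed to the fields the filter looks at.
events = [
    {"eventSource": "sts.amazonaws.com", "eventName": "AssumeRole",
     "requestParameters": {"roleArn": WATCHED_ROLE}},
    {"eventSource": "sts.amazonaws.com", "eventName": "GetCallerIdentity",
     "requestParameters": None},
    {"eventSource": "sts.amazonaws.com", "eventName": "AssumeRole",
     "requestParameters": {"roleArn": "arn:aws:iam::222222222222:role/Other"}},
]

for hit in assume_role_events(events, {WATCHED_ROLE}):
    print("AssumeRole on watched role:", hit["requestParameters"]["roleArn"])
```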

Identity & Resource-based IAM Policies

With an identity-based IAM Policy, you're creating a policy (or attaching a built-in one) for a user, group or role, permitting that user, group or role to perform a set of actions against a particular resource. For example, listing buckets within S3.

There are also resource-based policies. This is something you would set on the resource, for example an S3 Bucket. You specify what actions are permitted against said resource. They also let you specify who has access to the resource.

Another difference between identity-based and resource-based policies is that the "who" in an identity-based policy is the user it's attached to. In a resource-based policy the "who can perform actions against me" is detailed within the policy attached to that particular resource.

In a resource-based policy, the Principal element within the JSON specifies the user, account, service, or other entity that is allowed or denied access to said resource.
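To show where the Principal element sits, here is a sketch that builds a simple resource-based policy for an S3 bucket as a Python dictionary and prints it as JSON. The helper name is mine, the bucket and user are borrowed from examples elsewhere in this post, and the masked account ID is only a placeholder, so this would need real values before S3 accepted it.

```python
import json


def bucket_read_policy(bucket_name: str, principal_arn: str) -> dict:
    """Build a bucket policy letting one principal read objects in a bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The "who": only present in resource-based policies.
                "Principal": {"AWS": principal_arn},
                "Action": "s3:GetObject",
                # The "what": every object inside the bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


policy = bucket_read_policy(
    "sysforensics-blogtest",
    "arn:aws:iam::3xxxxxxxxxx8:user/meh",  # placeholder account ID
)
print(json.dumps(policy, indent=2))
```

An identity-based policy granting the same access would look the same minus the Principal element, because the "who" is whatever user, group or role the policy is attached to.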

IAM Policies

To assign permissions to a user, group, role, or resource, you create an IAM policy.

The policies are JSON documents. More than one statement can be included in a policy document.

Within the sample policy below we define Effect, Action, and Resource.

You can specify many other elements, for example Condition and Principal. You can find them here.

In this sample policy:

- Effect: We are allowing this to happen (it could be Deny)

- By default, access to resources is denied. To allow access to a resource, you must set the Effect element to Allow.

- Action: The actions that are allowed (effect) by this policy. In this example, listing all of my S3 buckets.

- Resource: The resources on which the actions can occur. In my example, everything in s3, hence the *.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "s3:ListAllMyBuckets",
          "Resource": [
            "arn:aws:s3:::*"
          ]
        }
      ]
    }

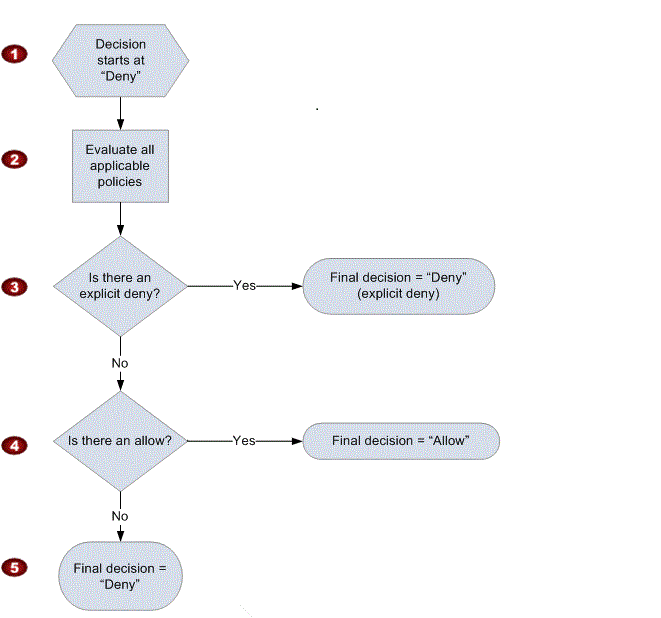

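When evaluating a policy, Amazon uses its IAM Policy Evaluation Logic: everything is denied by default, an explicit Allow overrides that default, and an explicit Deny overrides any Allow.

Let's say you created a user and they want to list S3 buckets, but they do not have an S3 policy assigned.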
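The core of that evaluation logic (default deny, an explicit Allow is required, an explicit Deny always wins) can be sketched in a few lines of Python. This is a deliberately simplified model of my own, not AWS's implementation: it ignores Condition, NotAction, Principal and every other policy type, and it uses shell-style wildcard matching as a stand-in for IAM's matching rules.

```python
from fnmatch import fnmatchcase


def _as_list(value):
    """Policy elements may be a single value or a list of values."""
    return value if isinstance(value, list) else [value]


def _matches(patterns, value):
    return any(fnmatchcase(value, pattern) for pattern in _as_list(patterns))


def evaluate(policies, action, resource):
    """Return "Allow" or "Deny" for one action on one resource."""
    decision = "Deny"  # default deny
    for policy in policies:
        for statement in _as_list(policy["Statement"]):
            if not _matches(statement["Action"], action):
                continue
            if not _matches(statement["Resource"], resource):
                continue
            if statement["Effect"] == "Deny":
                return "Deny"  # an explicit deny always wins
            decision = "Allow"
    return decision


# The sample policy from this post.
list_buckets = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:ListAllMyBuckets",
         "Resource": ["arn:aws:s3:::*"]}
    ],
}
# A hypothetical policy that explicitly denies all of S3.
deny_s3 = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Deny", "Action": "s3:*", "Resource": "*"}],
}

print(evaluate([], "s3:ListAllMyBuckets", "arn:aws:s3:::*"))
print(evaluate([list_buckets], "s3:ListAllMyBuckets", "arn:aws:s3:::*"))
print(evaluate([list_buckets, deny_s3], "s3:ListAllMyBuckets", "arn:aws:s3:::*"))
```

The three calls cover the three cases: no policy at all, an Allow on its own, and an Allow combined with an explicit Deny.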

aws s3 --profile s3test ls

An error occurred (AccessDenied) when calling the ListBuckets operation: Access Denied

So I created a mock custom S3 policy to allow a user to ListAllMyBuckets and attached it to the user s3test.

NOTE: Amazon recommends that you use the AWS defined policies to assign permissions when possible.

Here is the JSON showing what this simple policy looks like.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "s3:ListAllMyBuckets",
          "Resource": [
            "arn:aws:s3:::*"
          ]
        }
      ]
    }

Now we can attach it to the user, s3test.

Now we can try and rerun the same command we were previously denied. Yay, it works. This is just a test policy to highlight a point. I am not endorsing it for your environment.

aws s3 --profile s3test ls

2017-11-06 17:08:03 polsen-1234

2017-11-06 17:08:33 sysforensics-blogtest

So why this rabbit hole? Well, policies are what give your users permissions.

This is what allows them to do bad things within your environment.

Let's say you want to see what permissions a particular user has attached to them.

Using the aws cli tool, you can do the following:

aws iam list-attached-user-policies --user-name s3test

    {
      "AttachedPolicies": [
        {
          "PolicyName": "S3BucketAccessByIAMUser",
          "PolicyArn": "arn:aws:iam::31xxxxxxxx48:policy/S3BucketAccessByIAMUser"
        }
      ]
    }

This command will only show the policies attached directly to an IAM User. You also need to see if this user is part of a group and, if so, what policies he/she is inheriting via that group.

aws iam list-groups-for-user --user-name s3test

    {
      "Groups": [
        {
          "Path": "/",
          "CreateDate": "2017-11-06T18:16:07Z",
          "GroupId": "AGPAxxxxxxxxxxxxxT5Z2",
          "Arn": "arn:aws:iam::31xxxxxxxx48:group/Administrators",
          "GroupName": "Administrators"
        }
      ]
    }

So now you know that s3test belongs to a group called Administrators. Now we can list the policies attached to that particular group.

aws iam list-attached-group-policies --group-name Administrators

    {
      "AttachedPolicies": [
        {
          "PolicyName": "AdministratorAccess",
          "PolicyArn": "arn:aws:iam::aws:policy/AdministratorAccess"
        }
      ]
    }

So now we know the user s3test has AdministratorAccess.
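If you save the output of those commands, combining them into one view of a user's attached policies is straightforward. The sketch below is my own helper, fed with the output shown above. It only covers attached (managed) policies; inline policies are listed by separate commands (aws iam list-user-policies and aws iam list-group-policies) and are not included here.

```python
from typing import Dict, Set


def effective_policy_arns(user_policies: dict,
                          group_policies_by_group: Dict[str, dict]) -> Set[str]:
    """Union of policy ARNs attached to a user and to each of its groups."""
    arns = {p["PolicyArn"] for p in user_policies["AttachedPolicies"]}
    for response in group_policies_by_group.values():
        arns.update(p["PolicyArn"] for p in response["AttachedPolicies"])
    return arns


# Output of: aws iam list-attached-user-policies --user-name s3test
user_policies = {
    "AttachedPolicies": [
        {"PolicyName": "S3BucketAccessByIAMUser",
         "PolicyArn": "arn:aws:iam::31xxxxxxxx48:policy/S3BucketAccessByIAMUser"}
    ]
}
# Output of: aws iam list-attached-group-policies, keyed by group name
group_policies = {
    "Administrators": {
        "AttachedPolicies": [
            {"PolicyName": "AdministratorAccess",
             "PolicyArn": "arn:aws:iam::aws:policy/AdministratorAccess"}
        ]
    }
}

for arn in sorted(effective_policy_arns(user_policies, group_policies)):
    print(arn)
```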

There will be a lot more on IAM policies in future posts as well. They are the bedrock of AWS.

Summary

I will leave the IAM overview at that. Again, I really suggest you take a look at the IAM User Guide. It's 689 pages. It's very hard to summarize that much information in a single blog post.

Hopefully this gave you a bit of an overview.

I will touch on IAM event logging within AWS CloudTrail in Part III.

Lastly, I will leave you with Amazon's IAM best practices.

- Lock away your AWS Account Root User Access Keys

- Create individual IAM users

- Use AWS Defined Policies to Assign Permissions Whenever Possible

- Use Groups to Assign Permissions to IAM Users

- Grant Least Privilege

- Use Access Levels to Review IAM Permissions

- Configure a Strong Password Policy for Your Users

- Enable MFA for Privileged Users (at a minimum)

- Use Roles for Applications That Run on Amazon EC2 Instances

- Delegate by Using Roles Instead of by Sharing Credentials

- Rotate Credentials Regularly

- Remove Unnecessary Credentials

- Use Policy Conditions for Extra Security

- Monitor Activity in Your AWS Account

Enjoy!