Businesses of all sizes seem to be moving at least some operations to the cloud. It’s only a matter of time before you get a phone call asking you to conduct some kind of cloud forensics and/or incident response.

Why wait for that phone call before you start digging into the know-how of conducting it? I figured I would explore a few common scenarios over a series of blog posts and look at some of the tools and techniques we as analysts can use to make life easier when that call comes.

PART I - Snapshot Suspect System

Let's start off with a scenario. A client is running an <insert_purpose> server on Amazon's cloud. It gets compromised, or they think it has been. Let's say they are running "Microsoft Windows Server 2012 with SQL Server Standard - ami-a09d30c8", exposing MS SQL on the default port 1433 and RDP on 3389.

It looks something like this:

So you got the call, you signed your statement of work, and you're ready to begin. In parallel we will start with the following:

First, have the client halt what they are doing and take a "snapshot" of the system ASAP. You can do this as follows:

- Go to Instances. Write down the Instance ID of the compromised system. In this case we can see from above it's i-54fecdbf.

- Go to "Volumes" under Elastic Block Store. Write down the Volume ID attached to the Instance ID above.

- You can see here in the image that Instance ID i-54fecdbf maps to Volume ID vol-2364126b.

- Go to Snapshots on the left hand side under Elastic Block Store.

- Click Create Snapshot and fill out the proper information in the box.

- Your snapshot will be created in a few minutes. Once it's created, right click it and select Create Volume.

- Now configure the Volume you are creating from this snapshot: the Type (SSD, Magnetic, etc.), the Size, and the Availability Zone. Use the same zone as your suspect system, in this case us-east-1a.

- Now navigate to Volumes on the left hand side under Elastic Block Store. You should now see your Volume in there.

- Write down the Volume ID: vol-3f5c2a77

- Now before we do anything else let's move to Part II.
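The console steps in Part I can also be scripted with the AWS CLI. What follows is a minimal sketch, not a vetted procedure. It assumes the `aws` CLI is installed and configured with credentials for the client's account, and it reuses the IDs from this walkthrough. The `run` helper and the `part_one` function are my own illustrative names, and `snap-EXAMPLE` is a placeholder for whatever snapshot ID `create-snapshot` actually returns. By default nothing is executed; each command is only printed.

```shell
#!/usr/bin/env bash
# Dry-run sketch of Part I with the AWS CLI.
# Nothing is executed unless RUN=1 is set in the environment.

run() {
  if [ "${RUN:-0}" = "1" ]; then
    "$@"                      # really call the AWS CLI
  else
    printf '%s\n' "$*"        # just show what would be run
  fi
}

INSTANCE_ID="i-54fecdbf"      # suspect instance from this walkthrough
SUSPECT_VOLUME="vol-2364126b" # volume attached to that instance
SNAPSHOT_ID="snap-EXAMPLE"    # placeholder: use the ID create-snapshot returns
ZONE="us-east-1a"             # same zone as the suspect system

part_one() {
  # Look up the volume(s) attached to the suspect instance.
  run aws ec2 describe-volumes \
    --filters "Name=attachment.instance-id,Values=${INSTANCE_ID}"
  # Snapshot the suspect volume.
  run aws ec2 create-snapshot --volume-id "$SUSPECT_VOLUME" \
    --description "forensic-snapshot-${INSTANCE_ID}"
  # Block until the snapshot has completed.
  run aws ec2 wait snapshot-completed --snapshot-ids "$SNAPSHOT_ID"
  # Create a working volume from the snapshot in the same zone.
  run aws ec2 create-volume --snapshot-id "$SNAPSHOT_ID" \
    --availability-zone "$ZONE"
}

part_one
```

Review the printed commands first, then rerun with `RUN=1` once the placeholder snapshot ID has been replaced with the real one.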

PART II - Analysis System Creation

Now we have our snapshot and volume created. We do that first for evidence preservation. Memory collection would really be the first step, but I skipped it here and I'll get into that in another post. Let's just assume we already collected memory from the live system and are now moving on to analyze the HDD.

We now need to build our analysis system.

Navigate back into your AWS console and then click the EC2 link under Compute & Networking.

Then click Launch Instance.

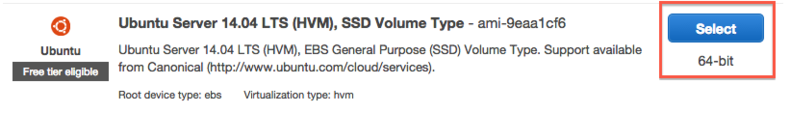

The obvious choice is Ubuntu (e.g. Ubuntu Server 14.04 LTS (HVM), SSD Volume Type - ami-9eaa1cf6). Click Select, then choose your specs. I went with an m3.large, but for a real case I might want something larger and more powerful for processing and analysis.

Pick whatever fits your budget. The m3.large costs $0.14 an hour, so IMO there is no need to hold back on specs for a few days' work.

When you're launching your Ubuntu analysis system, make sure you select the same region and Availability Zone as the suspect system. As you can see above, our SQL Server instance is located in us-east-1a, so we want ours to be there too, because an EBS volume can only be attached to an instance in its own Availability Zone. There are some ways around this that I'll get into at the end of the post, but for the sake of argument let's just do this.

After this you will need to configure your security keys, and if required (and suggested) lock down the IP addresses allowed to access your new analysis system. I locked down SSH to my remote IP address.

Now you should have something like this with our Forensics Analysis System being the one we just created.

Now we want to log into the analysis system and start configuring it. I'm using Linux to SSH into it, but PuTTY, etc. will suffice. Do the following:

- chmod 400 name.pem

- ssh -i name.pem ubuntu@yourpublicdns

- yes

- sudo apt-get update

- sudo apt-get upgrade

- y

- wget https://raw.github.com/sans-dfir/sift-bootstrap/master/bootstrap.sh

- sudo bash bootstrap.sh -i

- Wait for about 30+ mins.

Installation Complete!

You may get an error when you try running log2timeline.py (EwfFile requires at least pyewf 20131210). I don't know why, but just do the following to get it working again:

sudo apt-get autoremove libewf2 (not sure if that's a 100% solution, but it worked)

So yeah, congrats. You now have a functional SIFT 3.0 analysis system in the Amazon EC2 cloud. Let's go ahead and create an AMI from it.

- Go to Instances on the left hand side.

- Right click the instance you want to create an image from.

- Configure the settings and create it. If you want to add additional storage go ahead, but I choose to do so when I need it vs. pre-allocating it.

- Now if you go into AMIs you will see your image. This is pretty much like having a deployable SIFT 3.0 image in the cloud, ready at any time.

PART III - Analysis

Ok, so now we have our snapshot of the suspect system and its respective volume. We also have our analysis system, and we have created an AMI of it so we can revert to a clean SIFT 3.0 image if/when we want. Do the following:

- Navigate to Volumes under Elastic Block Store (left hand side).

- Right click our Volume from earlier (vol-3f5c2a77) and click Attach Volume.

- Click Attach.
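The attach step has an AWS CLI equivalent as well. This is a small sketch under assumptions: `attach_cmd` is a hypothetical helper that only builds and prints the command, `i-ANALYSIS` is a placeholder for your own analysis instance ID, and `/dev/sdf` is an assumed device name. On instances like the one used here, a volume attached as `/dev/sdf` typically appears inside the Linux guest as `/dev/xvdf`.

```shell
#!/usr/bin/env bash
# Build (but do not run) the attach-volume call for the console step above.

attach_cmd() {
  # $1 = volume ID, $2 = analysis instance ID, $3 = device name
  printf 'aws ec2 attach-volume --volume-id %s --instance-id %s --device %s\n' \
    "$1" "$2" "$3"
}

# i-ANALYSIS is a placeholder for your own analysis instance ID.
attach_cmd "vol-3f5c2a77" "i-ANALYSIS" "/dev/sdf"
```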

Ok, now it's attached. Let's SSH back into our analysis system.

- ssh -i name.pem ubuntu@yourpublic_dns

- sudo fdisk -l

- Disk /dev/xvdf: 53.7 GB, 53687091200 bytes

- sudo md5sum /dev/xvdf

- 55342cdb2fb7d3787648a7e11bb3156c

You could do a couple of things here. You could dd the image to another attached volume and have Amazon ship it to you, download the image, or simply mount it and analyze it in the cloud. As long as you can prove the integrity of the evidence, I see no issue with analyzing it in the cloud. It's also cheaper.
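Proving integrity comes down to re-hashing the device later and comparing the result with the value you recorded. Here is a minimal sketch of that check, assuming `md5sum` and `awk` are available (they are on a stock Ubuntu install). The function names `hash_of` and `verify_hash` are my own, not part of SIFT.

```shell
#!/usr/bin/env bash
# Sketch: hash a device (or image file) and compare against a recorded value.

hash_of() {
  # Print only the hex digest of the given path.
  md5sum "$1" | awk '{print $1}'
}

verify_hash() {
  # $1 = path, $2 = digest recorded earlier
  local current
  current="$(hash_of "$1")"
  if [ "$current" = "$2" ]; then
    echo "MATCH $current"
  else
    echo "MISMATCH expected=$2 actual=$current"
    return 1
  fi
}

# Example, run as root because /dev/xvdf is a block device:
#   verify_hash /dev/xvdf 55342cdb2fb7d3787648a7e11bb3156c
```

Running the same check after analysis, and again on any copy you dd or download, gives you a simple record that the evidence did not change. Swapping `md5sum` for `sha256sum` works the same way if you prefer a stronger hash.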

Mounting the Drive

- mkdir suspect_mount2

- sudo mmls /dev/xvdf

Units are in 512-byte sectors

Slot Start End Length Description

00: Meta 0000000000 0000000000 0000000001 Primary Table (#0)

01: ----- 0000000000 0000002047 0000002048 Unallocated

02: 00:00 0000002048 0000718847 0000716800 NTFS (0x07)

03: 00:01 0000718848 0104855551 0104136704 NTFS (0x07)

04: ----- 0104855552 0104857599 0000002048 Unallocated

512 * 718848 = 368050176 (system partition)

- sudo mount -o ro,noexec,showsysfiles,loop,offset=368050176 /dev/xvdf suspect_mount2

- ls suspect_mount2
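The offset passed to mount is just the partition's starting sector from mmls multiplied by the sector size. The sketch below does that arithmetic and prints the resulting mount command rather than running it. `offset_bytes` is a hypothetical helper name, and the 512-byte sector size is taken from the mmls output above.

```shell
#!/usr/bin/env bash
# Convert a partition's starting sector (from mmls) into a byte offset for mount.

SECTOR_SIZE=512   # mmls reported "Units are in 512-byte sectors"

offset_bytes() {
  # $1 = starting sector; leading zeros are stripped so the shell
  # does not treat the value as octal.
  local start
  start="$(printf '%s' "$1" | sed 's/^0*//')"
  echo $(( ${start:-0} * $SECTOR_SIZE ))
}

OFFSET="$(offset_bytes 0000718848)"
# Print the mount command instead of running it.
echo "sudo mount -o ro,noexec,showsysfiles,loop,offset=${OFFSET} /dev/xvdf suspect_mount2"
```

You can paste the start sector straight from the mmls table, zero padding included.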

Building a Timeline

- sudo log2timeline.py -o 718848 suspect.dump /dev/xvdf

- psort.py -z UTC suspect.dump suspect_timeline.csv

- Timeline: suspect_timeline.csv

So at this point you can pretty much do what you want with the system. It's mounted as read only.

Reference

SANS DFIR Slides - Incident Response and Forensics in the Cloud w/ Paul Henry